Graphs & Trees & Heaps

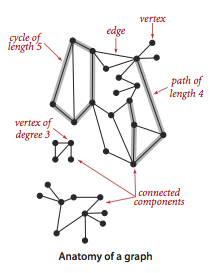

- A graph is a set of vertices and a collection of edges that each connect a pair of vertices.

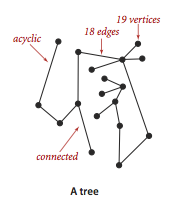

- A tree is an acyclic connected graph. A disjoint set of trees is called a forest.

- A spanning tree of a connected graph is a subgraph that contains all of that graph’s vertices and is a single tree.

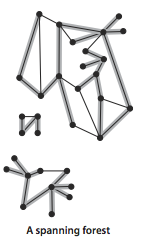

- A spanning forest of a graph is the union of spanning trees of its connected components.

- A tree is a data structure composed of nodes: A root node, and has zero or more child nodes. Each child node has zero or more child nodes.

- A binary tree is a tree in which each node has up to two children. A binary search tree is a binary tree which every node fits a specific ordering property: All left descendents <= n < all right descendents.

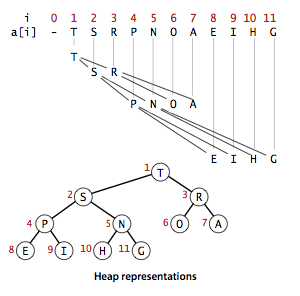

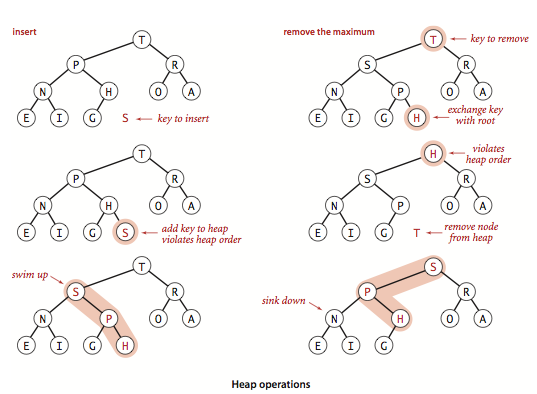

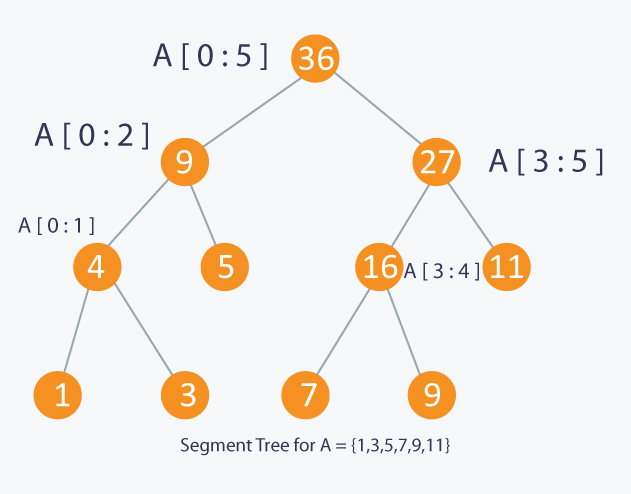

- A binary heap (min-heap or max-heap) is a complete binary tree, takes O(n) to build a heap. insertion and deletion will both take O(log(n)) time.

- A trie (prefix tree) is a variant of a binary tree (R-way tree) in which characters are stored at each node. Each path down the tree may represent a word. Tries Searching

|

|

|

Graph Search

- The two most common ways to search a graph are depth-first search (DFS) and breadth-first search (BFS). DFS is often preferred if we want to visit every node in the graph. BFS is generally better if we want to find the shortest path (or just any path).

- Bidirectional search is used to find the shortest path between a source and destination node. It operates by essentially running two simultaneous BFS, one from each node. When their searches collide, we have found a path. The complexity reduces from O(k^d) to O(k^(d/2)).

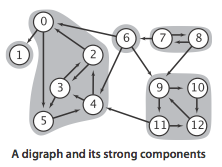

- A directed graph (or digraph) is a set of vertices and a collection of directed edges that each connects an ordered pair of vertices.

- A directed acyclic graph (or DAG) is a digraph with no directed cycles.

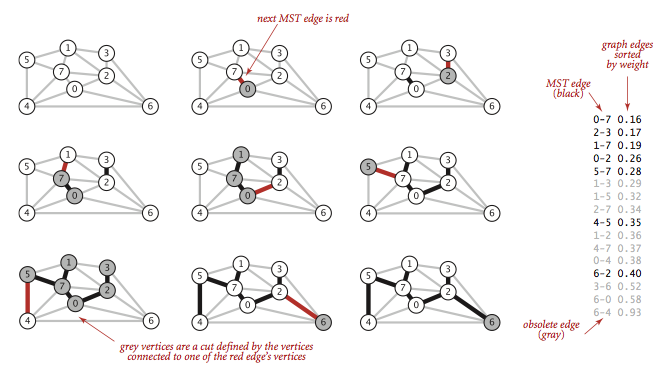

- A minimum spanning tree (MST) of an edge-weighted graph is a spanning tree whose weight (the sum of the weights of its edges) is no larger than the weight of any other spanning tree. Prim’s or Kruskal’s algorithm computes the MST of any connected edge-weighted graph.

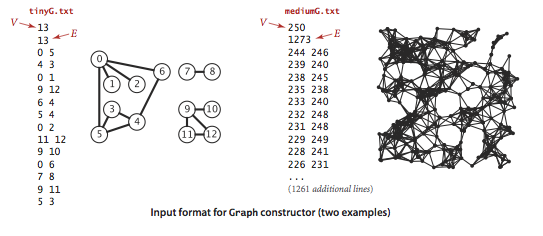

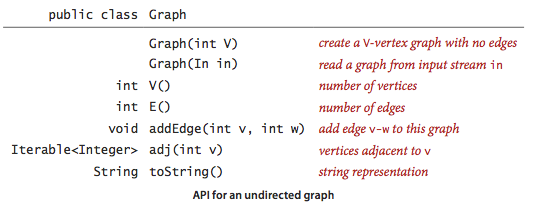

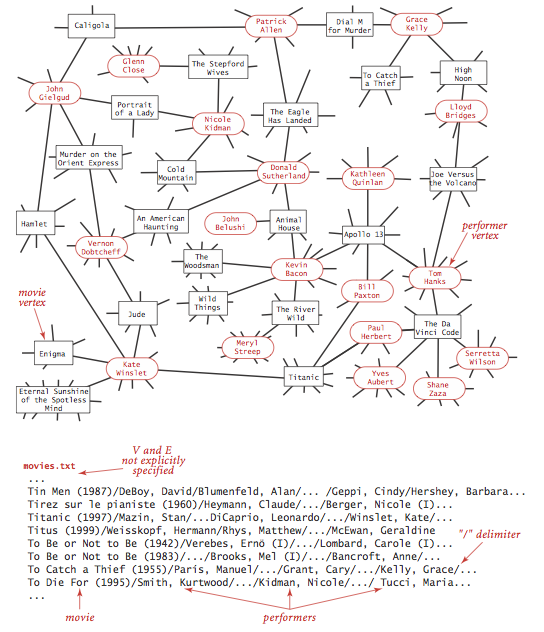

Construct Graph

We use the adjacency-lists representation, where we maintain a vertex-indexed array of lists of the vertices connected by an each to each vertex.

|

|

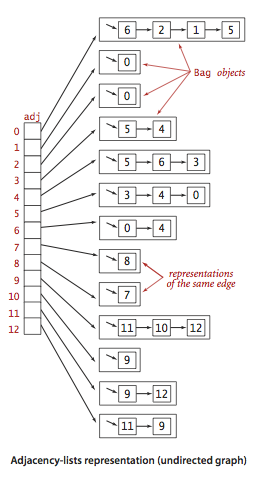

Construct Symbol Graph

Typical applications involve processing graphs using strings, not integer indices, to define and refer to vertices. We can use following data structure:

- A map table with String keys (vertex names) and int values (indices).

- An array keys[] that serves as an inverted index, giving the vertex name tied with each integer index.

- A Graph built using the indices to refer to vertices.

public class SymbolGraph {

private final String NEW_LINE = System.getProperty("line.separator");

private final int numVertices;

private int numEdges;

private Map<String, Integer> map; // key -> index

private String[] keys; // index -> key, inverted array

private List<List<Integer>> adjacents;

public SymbolGraph(String filename, String delimiter) {

map = new HashMap<String, Integer>();

// First pass builds the index by reading strings to associate

// distinct strings with an index

try {

File file = new File(filename);

FileInputStream stream = new FileInputStream(file);

Scanner scanner = new Scanner(new BufferedInputStream(stream));

// first pass builds the index by reading vertex names

while (scanner.hasNextLine()) {

String[] a = scanner.nextLine().split(delimiter);

for (String value : a) {

if (!map.containsKey(value))

map.put(value, map.size());

}

}

// inverted index to get string keys in an array

keys = new String[map.size()];

for (String name : map.keySet()) {

keys[map.get(name)] = name;

}

numVertices = map.size();

// second pass builds the graph by connecting first vertex on each

// line to all others

this.numEdges = 0;

this.adjacents = new ArrayList<>(numVertices);

for (int v = 0; v < numVertices; v++) {

adjacents.add(new ArrayList<Integer>());

}

stream = new FileInputStream(file);

scanner = new Scanner(new BufferedInputStream(stream));

while (scanner.hasNextLine()) {

String[] a = scanner.nextLine().split(delimiter);

int v = map.get(a[0]);

for (int i = 1; i < a.length; i++) {

int w = map.get(a[i]);

addEdge(v, w);

}

}

scanner.close();

} catch (Exception e) {

throw new IllegalArgumentException("Could not read " + filename, e);

}

}

public boolean contains(String key) {

return map.containsKey(key);

}

public int indexOf(String key) {

return map.get(key);

}

public String nameOf(int vertex) {

validateVertex(vertex);

return keys[vertex];

}

public int numVertices() {

return numVertices;

}

public int numEdges() {

return numEdges;

}

public void addEdge(int v, int w) {

validateVertex(v);

validateVertex(w);

numEdges++;

adjacents.get(v).add(w);

adjacents.get(w).add(v);

}

public Iterable<Integer> adjacents(int v) {

validateVertex(v);

return adjacents.get(v);

}

public int degree(int v) {

validateVertex(v);

return adjacents.get(v).size();

}

private void validateVertex(int vertex) {

if (vertex < 0 || vertex >= numVertices)

throw new IllegalArgumentException("vertex " + vertex + " is not between 0 and " + (numVertices - 1));

}

public String toString() {

StringBuilder s = new StringBuilder();

s.append(numVertices + " vertices, " + numEdges + " edges " + NEW_LINE);

for (int v = 0; v < numVertices; v++) {

s.append(v + ": ");

for (int w : adjacents.get(v)) {

s.append(w + " ");

}

s.append(NEW_LINE);

}

return s.toString();

}

}

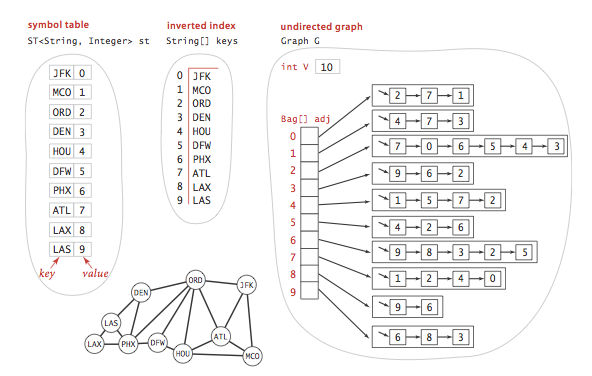

Degrees of Separation

One of the classic applications of graph processing is to find the degree of separation between two individuals in a social network.

We will take the movies.txt data to demonstrate the SymbolGraph and BreadFirstPaths to find shortest paths in graphs.

public class DegreesOfSeparation {

private static final int INFINITY = Integer.MAX_VALUE;

private boolean[] visited; // marked[v] = is there an s-v path

private int[] edgeTo; // edgeTo[v] = previous edge on shortest s-v path

private int[] indegrees; // indegrees[v] = number of edges shortest s-v path

public DegreesOfSeparation(SymbolGraph graph, int source) {

visited = new boolean[graph.numVertices()];

indegrees = new int[graph.numVertices()];

edgeTo = new int[graph.numVertices()];

validateVertex(source);

bfs(graph, source);

}

private void bfs(SymbolGraph graph, int source) {

Queue<Integer> queque = new LinkedList<Integer>();

for (int v = 0; v < graph.numVertices(); v++)

indegrees[v] = INFINITY;

indegrees[source] = 0;

visited[source] = true;

queque.offer(source);

while (!queque.isEmpty()) {

int v = queque.poll();

for (int w : graph.adjacents(v)) {

if (!visited[w]) {

edgeTo[w] = v;

indegrees[w] = indegrees[v] + 1;

visited[w] = true;

queque.offer(w);

}

}

}

}

public boolean hasPathTo(int v) {

validateVertex(v);

return visited[v];

}

/**

* Returns the number of edges in a shortest path between the source and target

*/

public int indegress(int vertex) {

validateVertex(vertex);

return indegrees[vertex];

}

public Iterable<Integer> pathTo(int vertex) {

validateVertex(vertex);

if (!hasPathTo(vertex))

return null;

Stack<Integer> path = new Stack<Integer>();

int x;

for (x = vertex; indegrees[x] != 0; x = edgeTo[x])

path.push(x);

path.push(x);

return path;

}

// throw an IllegalArgumentException unless {@code 0 <= v < V}

private void validateVertex(int vertex) {

int V = visited.length;

if (vertex < 0 || vertex >= V)

throw new IllegalArgumentException("vertex " + vertex + " is not between 0 and " + (V - 1));

}

public String getPathToTarget(SymbolGraph sg, String target) {

StringBuilder builder = new StringBuilder();

if (sg.contains(target)) {

int t = sg.indexOf(target);

if (hasPathTo(t)) {

for (int v : pathTo(t)) {

if (builder.length() > 0)

builder.append(" -> ");

builder.append(sg.nameOf(v));

}

} else {

builder.append(target).append(" not connected.");

}

} else {

builder.append(target).append(" not in database.");

}

return builder.toString();

}

public static void main(String[] args) {

SymbolGraph graph = new SymbolGraph("data/movies.txt", "/");

String sourceName = "Bacon, Kevin";

if (!graph.contains(sourceName)) {

System.out.println(sourceName + " not in database.");

return;

}

int source = graph.indexOf(sourceName);

DegreesOfSeparation bfs = new DegreesOfSeparation(graph, source);

String target = "Kidman, Nicole";

String result = bfs.getPathToTarget(graph, target);

String answer = "Kidman, Nicole -> Cold Mountain (2003) -> Sutherland, Donald (I) -> Animal House (1978) -> Bacon, Kevin";

assert result.equals(answer);

target = "Grant, Cary";

result = bfs.getPathToTarget(graph, target);

answer = "Grant, Cary -> Charade (1963) -> Matthau, Walter -> JFK (1991) -> Bacon, Kevin";

assert result.equals(answer);

target = "Richie, Chen";

result = bfs.getPathToTarget(graph, target);

answer = "Richie, Chen not in database.";

assert result.equals(answer);

}

}

Construct Digraph

|

|

Compute MST

Kruskal’s algorithm processes the edges in order of their weight values (smallest to largest), taking for the MST (coloring black) each edge that does not form a cycle with edges previously added, stopping after adding V-1 edges. The black edges form a forest of trees that evolves gradually into a single tree, the MST (Minimum Spanning Tree).

This implementation of Kruskal’s algorithm uses a queue to hold MST edges, a priority queue to hold edges not yet examined, and a union-find data structure for identifying ineligible edges.

public class KruskalMST {

private Queue<Edge> mst;

public KruskalMST(EdgeWeightedGraph G) {

mst = new Queue<Edge>();

MinPQ<Edge> pq = new MinPQ<Edge>();

for (Edge e : G.edges) {

pq.insert(e);

}

UionFind uf = new UF(G.V());

while (!pq.isEmpty() && mst.size() < G.V() - 1) {

Edge e = pq.delMin();

int v = e.either(), w = e.other(v);

if (uf.connected(v, w)) continue;

uf.union(v, w);

mst.enqueue(e);

}

}

}

public class UnionFind {

private int[] parent; // parent[i] = parent of i

private byte[] rank; // rank[i] = rank of subtree rooted at i (never more than 31)

private int count; // number of components

public UnionFind(int n) {

if (n < 0)

throw new IllegalArgumentException();

count = n;

parent = new int[n];

rank = new byte[n];

for (int i = 0; i < n; i++) {

parent[i] = i;

rank[i] = 0;

}

}

public int find(int p) {

validate(p);

while (p != parent[p]) {

parent[p] = parent[parent[p]]; // path compression by halving

p = parent[p];

}

return p;

}

public int count() {

return count;

}

public boolean connected(int p, int q) {

return find(p) == find(q);

}

public void union(int p, int q) {

int rootP = find(p);

int rootQ = find(q);

if (rootP == rootQ)

return;

if (rank[rootP] < rank[rootQ])

parent[rootP] = rootQ;

else if (rank[rootP] > rank[rootQ])

parent[rootQ] = rootP;

else {

parent[rootQ] = rootP;

rank[rootP]++;

}

count--;

}

private void validate(int p) {

if (p < 0 || p >= parent.length)

throw new IllegalArgumentException();

}

}

Shortest Paths

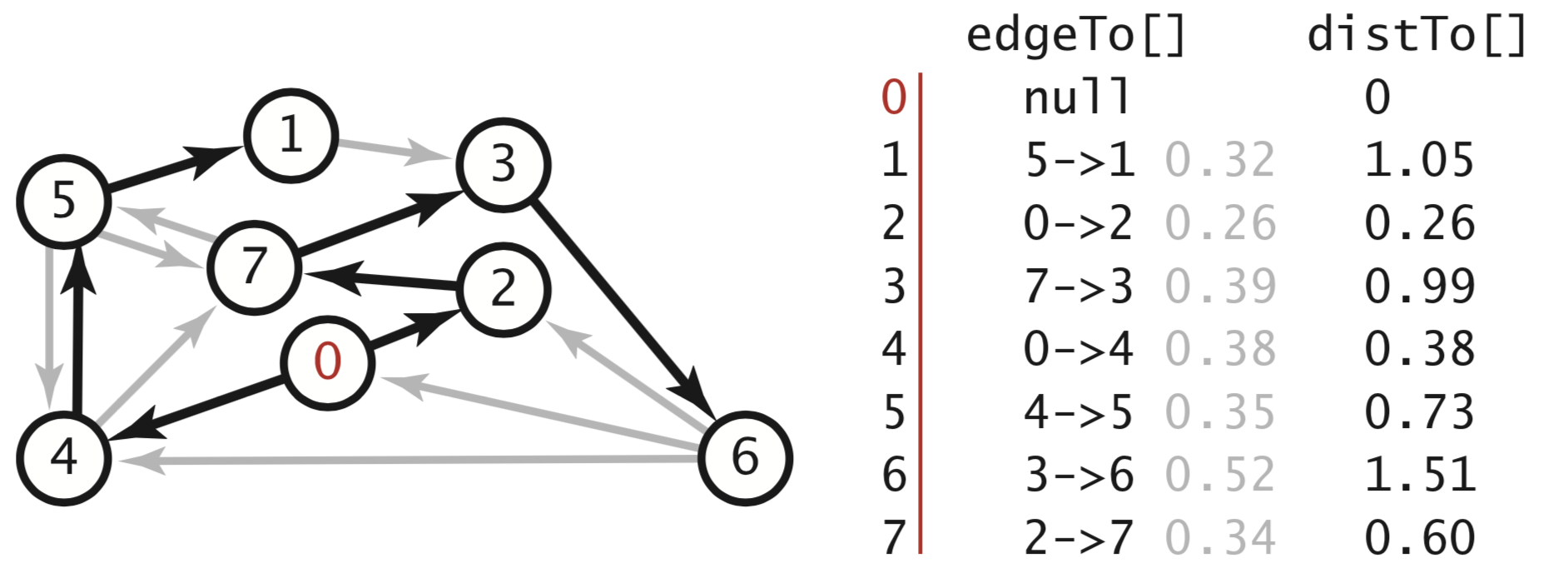

- A shortest path from vertex s to vertex t is a directed path in a edge-weighted digraph, that no other path has a lower weight. The implementation is based on edge relaxation. The table below summarizes the 3 shortest-paths algorithms.

| algorithm | restriction | typical | worst-case | extra space | sweet spot |

|---|---|---|---|---|---|

| Dijkstra (eager) | positive edge weights | \(E\log V\) | \(E\log V\) | \(V\) | worst-case guarantee |

| topological order | edge-weighted DAGs | \(E + V\) | \(E + V\) | \(V\) | optimal for acyclic |

| Bellman-Ford (queue-based) | no negative cycles | \(E + V\) | \(EV\) | \(V\) | widely applicable |

- Dijkstra’s algorithm solves the single-source shortest-paths problem in edge-weighted digraphs with nonnegative weights using extra space proportional to V and time proportional to E log V (in the worst case). It initializes dist[s] to 0 and all other distTo[] entries to positive infinity. Then, repeatedly relaxes and adds to the tree a non-tree vertex with the lowest distTo[] value, continuing until all vertices are on the tree or no non-tree vertex has a finite distTo[] value. We add a priority queue to keep track of vertices that are candidates for being the next to be relaxed. The visited[] array is not needed, because the lens[] or distTo[] is MAX_VALUE or POSITIVE_INFINITY.

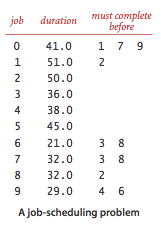

- By relaxing vertices in topological order, we can solve the single-source shortest-paths problem for acyclic edge-weighted digraph (called edge-weighted DAG) in time proportional to E + V. It handles negative edge weights. Also, we can solve the single-source longest paths problems in edge-weighted DAGs by initializing the distTo[] values to negative infinity and switching the sense of the inequality in relax(); Or another way is to create a copy of the given edge-weighted DAG that all edge weights are negated, then just need to find the shortest path.

public AcyclicSP(EdgeWeightedDigraph G, int s) {

distTo = new double[G.V()];

edgeTo = new DirectedEdge[G.V()];

for (int v = 0; v < G.V(); v++)

distTo[v] = Double.POSITIVE_INFINITY;

distTo[s] = 0.0;

// visit vertices in topological order

Topological topological = new Topological(G);

if (!topological.hasOrder())

throw new IllegalArgumentException("Digraph is not acyclic.");

for (int v : topological.order()) {

for (DirectedEdge e : G.adj(v))

relax(e);

}

}

// relax edge e

private void relax(DirectedEdge e) {

int v = e.from(), w = e.to();

if (distTo[w] > distTo[v] + e.weight()) {

distTo[w] = distTo[v] + e.weight();

edgeTo[w] = e;

}

}

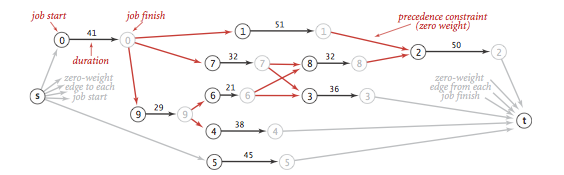

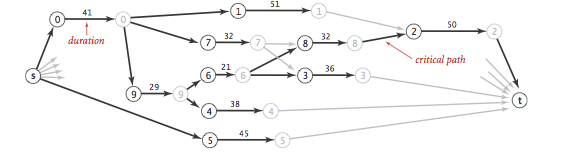

- The critical path method for parallel scheduling jobs is to proceed as follows:

|

|

public static void main(String[] args) {

// number of jobs

int n = StdIn.readInt();

// start and end

int start = 2 * n;

int end = 2 * n + 1;

// build network

EdgeWeightedDigraph G = new EdgeWeightedDigraph(2 * n + 2);

for (int i = 0; i < n; i++) {

double duration = StdIn.readDouble();

G.addEdge(new DirectedEdge(start, i, 0.0));

G.addEdge(new DirectedEdge(i + n, end, 0.0));

G.addEdge(new DirectedEdge(i, i + n, duration));

// precedence constraints

int m = StdIn.readInt();

for (int j = 0; j < m; j++) {

int precedent = StdIn.readInt();

G.addEdge(new DirectedEdge(n + i, precedent, 0.0));

}

}

// compute longest path

AcyclicLP lp = new AcyclicLP(G, start);

}

- Shortest paths in general edge-weighted digraphs. A negative cycle is a directed cycle whose total weight (sum of the weights of its edges) is negative. The concept of a shortest path is meaningless if there is a negative cycle. Bellman-Ford algorithm with negative cycle detection can solve this problem.

- Queue-based Bellman-Ford algorithm is an effective and efficient method for solving the shortest paths problem even for the case when edge weights are negative.

public BellmanFordSP(EdgeWeightedDigraph G, int s) {

distTo = new double[G.V()];

edgeTo = new DirectedEdge[G.V()];

onQueue = new boolean[G.V()];

for (int v = 0; v < G.V(); v++)

distTo[v] = Double.POSITIVE_INFINITY;

distTo[s] = 0.0;

queue = new Queue<Integer>();

queue.enqueue(s);

onQueue[s] = true;

while (!queue.isEmpty() && !hasNegativeCycle()) {

int v = queue.dequeue();

onQueue[v] = false;

relax(G, v);

}

assert check(G, s);

}

// relax vertex v and put other endpoints on queue if changed

private void relax(EdgeWeightedDigraph G, int v) {

for (DirectedEdge e : G.adj(v)) {

int w = e.to();

if (distTo[w] > distTo[v] + e.weight()) {

distTo[w] = distTo[v] + e.weight();

edgeTo[w] = e;

if (!onQueue[w]) {

queue.enqueue(w);

onQueue[w] = true;

}

}

// check negative cycle after done V times calls/relaxes

if (cost++ % G.V() == 0) {

findNegativeCycle();

if (hasNegativeCycle()) return; // found a negative cycle

}

}

}

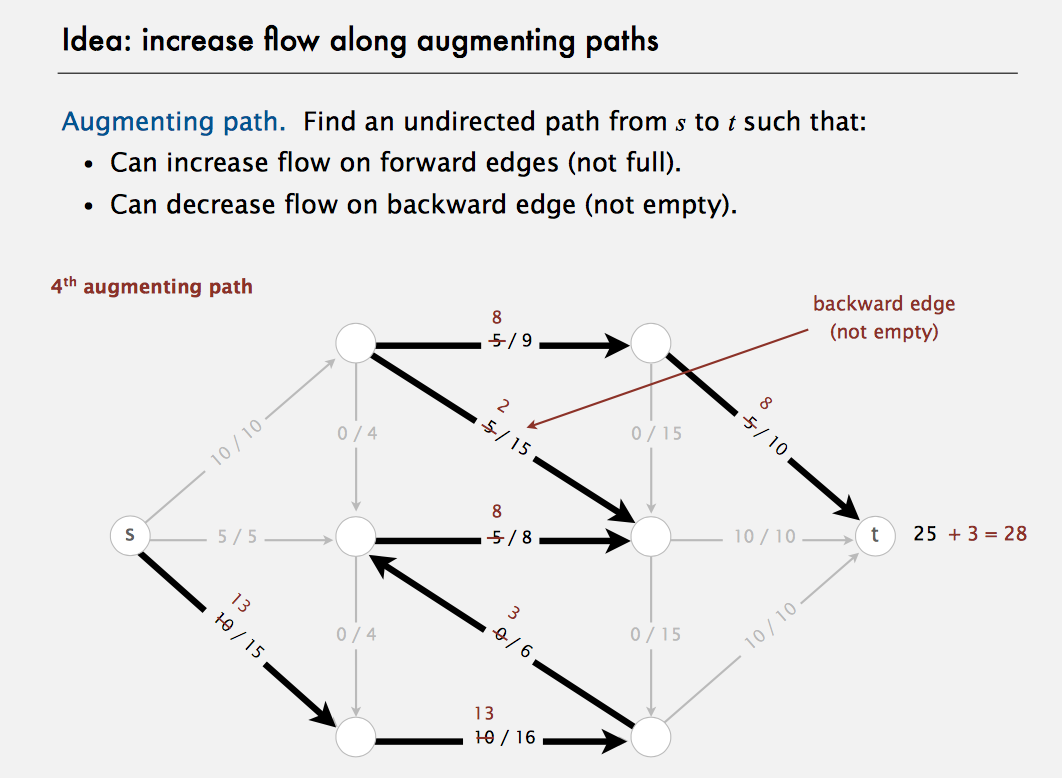

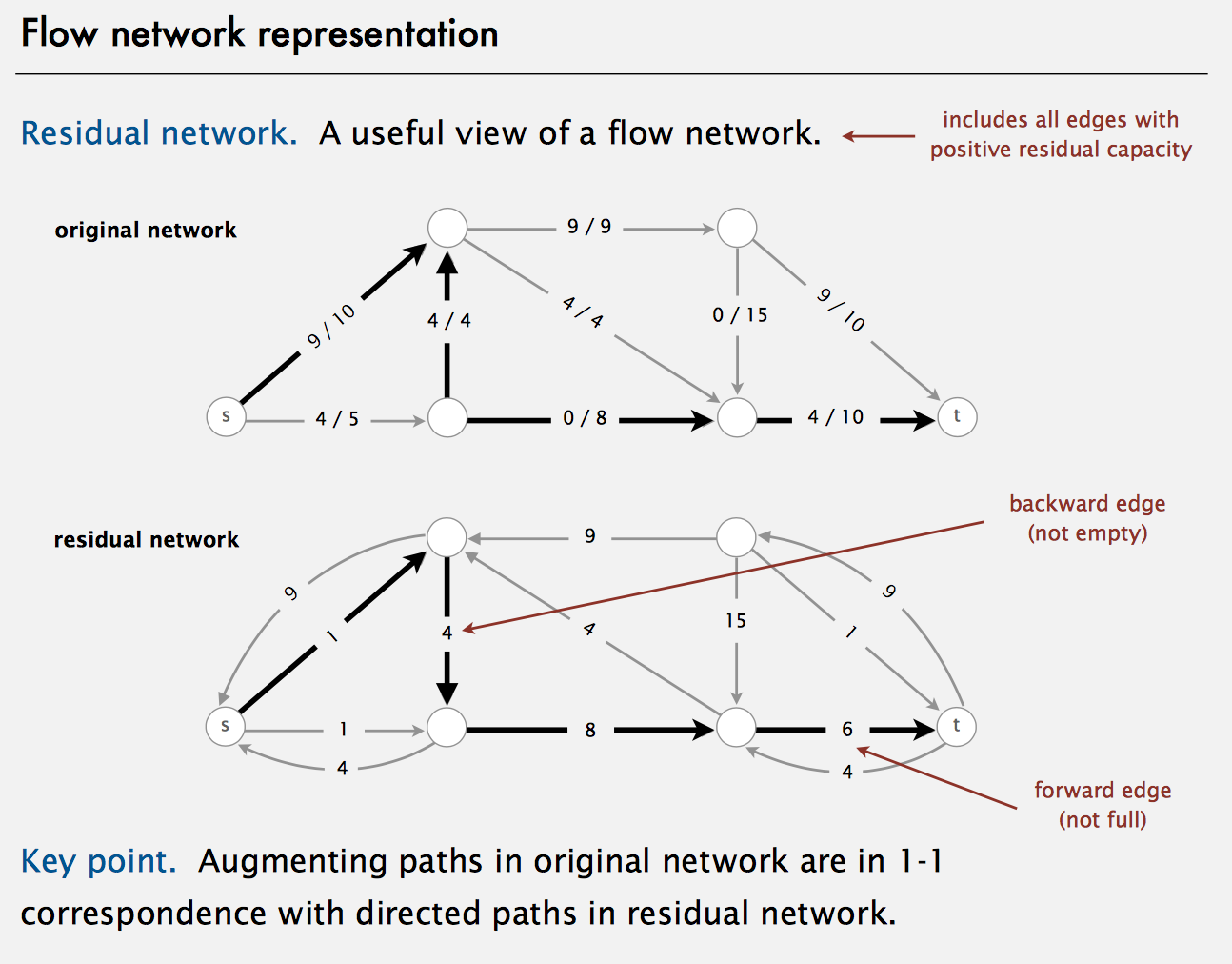

Maximum Flow

An st-flow (flow) is an assignment of values to the edges such that:

- Capacity constraint: 0 ≤ edge’s flow ≤ edge’s capacity.

- Local equilibrium: inflow = outflow at every vertex (except s and t).

Ford-Fulkerson algorithm:

- Start with 0 flow.

While there exists an augmenting path:

- find an augmenting path

- compute bottleneck capacity

- increase flow on that path by bottleneck capacity

The shortest-augmenting-path implementation of the Ford-Fulkerson maxflow algorithm takes time proportional to EV(E+V) in the worst case. Bread-first search examines at most E edges and V vertices.

public class FordFulkerson {

private static final double FLOATING_POINT_EPSILON = 1E-11;

private final int V; // number of vertices

private boolean[] marked; // marked[v] = true if s->v path in residual graph

private FlowEdge[] edgeTo; // edgeTo[v] = last edge on shortest residual s->v path

private double value; // current value of max flow

/**

* Compute a maximum flow and minimum cut in the network {@code G} from vertex {@code s} to

* vertex {@code t}.

*/

public FordFulkerson(FlowNetwork G, int s, int t) {

V = G.V();

validate(s);

validate(t);

if (s == t)

throw new IllegalArgumentException("Source equals sink");

if (!isFeasible(G, s, t))

throw new IllegalArgumentException("Initial flow is infeasible");

// while there exists an augmenting path, use it

value = excess(G, t);

while (hasAugmentingPath(G, s, t)) {

// compute bottleneck capacity

double bottle = Double.POSITIVE_INFINITY;

for (int v = t; v != s; v = edgeTo[v].other(v)) {

bottle = Math.min(bottle, edgeTo[v].residualCapacityTo(v));

}

// augment flow

for (int v = t; v != s; v = edgeTo[v].other(v)) {

edgeTo[v].addResidualFlowTo(v, bottle);

}

value += bottle;

}

}

public double value() {

return value;

}

public boolean inCut(int v) {

validate(v);

return marked[v];

}

private void validate(int v) {

if (v < 0 || v >= V)

throw new IllegalArgumentException("vertex " + v + " is not between 0 and " + (V - 1));

}

// is there an augmenting path?

// if so, upon termination edgeTo[] will contain a parent-link representation of such a path

// this implementation finds a shortest augmenting path (fewest number of edges),

// which performs well both in theory and in practice

private boolean hasAugmentingPath(FlowNetwork G, int s, int t) {

edgeTo = new FlowEdge[G.V()];

marked = new boolean[G.V()];

// breadth-first search

Queue<Integer> queue = new LinkedList<Integer>();

queue.offer(s);

marked[s] = true;

while (!queue.isEmpty() && !marked[t]) {

int v = queue.poll();

for (FlowEdge e : G.adj(v)) {

int w = e.other(v);

// if residual capacity from v to w

if (e.residualCapacityTo(w) > 0) {

if (!marked[w]) {

edgeTo[w] = e;

marked[w] = true;

queue.offer(w);

}

}

}

}

// is there an augmenting path?

return marked[t];

}

// return excess flow at vertex v

private double excess(FlowNetwork G, int v) {

double excess = 0.0;

for (FlowEdge e : G.adj(v)) {

if (v == e.from())

excess -= e.flow();

else

excess += e.flow();

}

return excess;

}

// return excess flow at vertex v

private boolean isFeasible(FlowNetwork G, int s, int t) {

// check that capacity constraints are satisfied

for (int v = 0; v < G.V(); v++) {

for (FlowEdge e : G.adj(v)) {

if (e.flow() < -FLOATING_POINT_EPSILON || e.flow() > e.capacity() + FLOATING_POINT_EPSILON) {

System.err.println("Edge does not satisfy capacity constraints: " + e);

return false;

}

}

}

// check that net flow into a vertex equals zero, except at source and sink

if (Math.abs(value + excess(G, s)) > FLOATING_POINT_EPSILON) {

System.err.println("Excess at source = " + excess(G, s));

System.err.println("Max flow = " + value);

return false;

}

if (Math.abs(value - excess(G, t)) > FLOATING_POINT_EPSILON) {

System.err.println("Excess at sink = " + excess(G, t));

System.err.println("Max flow = " + value);

return false;

}

for (int v = 0; v < G.V(); v++) {

if (v == s || v == t)

continue;

else if (Math.abs(excess(G, v)) > FLOATING_POINT_EPSILON) {

System.err.println("Net flow out of " + v + " doesn't equal zero");

return false;

}

}

return true;

}

public class FlowNetwork {

private final int V;

private int E;

private Bag<FlowEdge>[] adj;

/**

* Initializes an empty flow network with {@code V} vertices and 0 edges.

*/

public FlowNetwork(int V) {

if (V < 0)

throw new IllegalArgumentException("Number of vertices in a Graph must be nonnegative");

this.V = V;

this.E = 0;

adj = (Bag<FlowEdge>[]) new Bag[V];

for (int v = 0; v < V; v++)

adj[v] = new Bag<FlowEdge>();

}

public int V() {

return V;

}

public int E() {

return E;

}

private void validateVertex(int v) {

if (v < 0 || v >= V)

throw new IllegalArgumentException("vertex " + v + " is not between 0 and " + (V - 1));

}

public void addEdge(FlowEdge e) {

int v = e.from();

int w = e.to();

validateVertex(v);

validateVertex(w);

adj[v].add(e);

adj[w].add(e);

E++;

}

public Iterable<FlowEdge> adj(int v) {

validateVertex(v);

return adj[v];

}

// return list of all edges - excludes self loops

public Iterable<FlowEdge> edges() {

Bag<FlowEdge> list = new Bag<FlowEdge>();

for (int v = 0; v < V; v++)

for (FlowEdge e : adj(v)) {

if (e.to() != v)

list.add(e);

}

return list;

}

}

class FlowEdge {

// to deal with floating-point roundoff errors

private static final double FLOATING_POINT_EPSILON = 1E-10;

private final int v; // from

private final int w; // to

private final double capacity; // capacity

private double flow; // flow

public FlowEdge(int v, int w, double capacity) {

if (v < 0)

throw new IllegalArgumentException("vertex index must be a non-negative integer");

if (w < 0)

throw new IllegalArgumentException("vertex index must be a non-negative integer");

if (!(capacity >= 0.0))

throw new IllegalArgumentException("Edge capacity must be non-negative");

this.v = v;

this.w = w;

this.capacity = capacity;

this.flow = 0.0;

}

public FlowEdge(FlowEdge e) {

this.v = e.v;

this.w = e.w;

this.capacity = e.capacity;

this.flow = e.flow;

}

public int from() {

return v;

}

public int to() {

return w;

}

public double capacity() {

return capacity;

}

public double flow() {

return flow;

}

/**

* Returns the endpoint of the edge that is different from the given vertex (unless the edge

* represents a self-loop in which case it returns the same vertex).

*/

public int other(int vertex) {

if (vertex == v)

return w;

else if (vertex == w)

return v;

else

throw new IllegalArgumentException("invalid endpoint");

}

/**

* Returns the residual capacity of the edge in the direction to the given {@code vertex}.

*/

public double residualCapacityTo(int vertex) {

if (vertex == v)

return flow; // backward edge

else if (vertex == w)

return capacity - flow; // forward edge

else

throw new IllegalArgumentException("invalid endpoint");

}

/**

* Increases the flow on the edge in the direction to the given vertex. If {@code vertex} is

* the tail vertex, this increases the flow on the edge by {@code delta}; if {@code vertex}

* is the head vertex, this decreases the flow on the edge by {@code delta}.

*/

public void addResidualFlowTo(int vertex, double delta) {

if (!(delta >= 0.0))

throw new IllegalArgumentException("Delta must be nonnegative");

if (vertex == v)

flow -= delta; // backward edge

else if (vertex == w)

flow += delta; // forward edge

else

throw new IllegalArgumentException("invalid endpoint");

// round flow to 0 or capacity if within floating-point precision

if (Math.abs(flow) <= FLOATING_POINT_EPSILON)

flow = 0;

if (Math.abs(flow - capacity) <= FLOATING_POINT_EPSILON)

flow = capacity;

if (!(flow >= 0.0))

throw new IllegalArgumentException("Flow is negative");

if (!(flow <= capacity))

throw new IllegalArgumentException("Flow exceeds capacity");

}

}

}

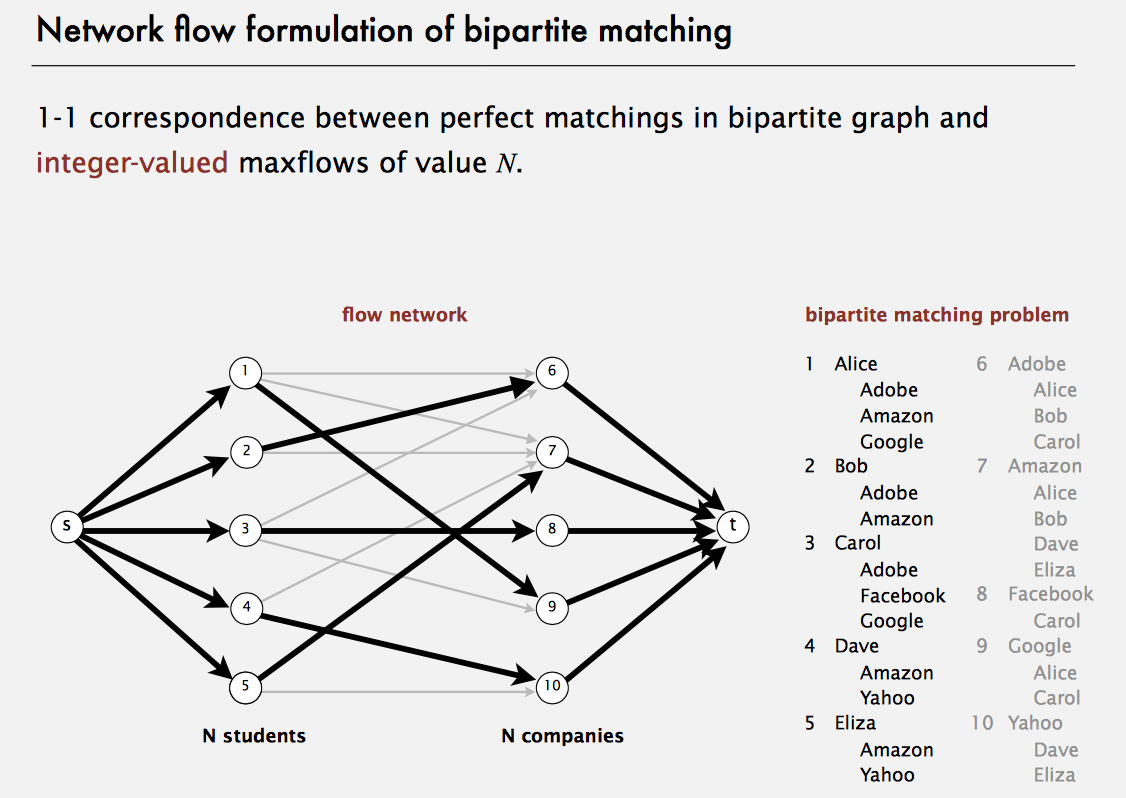

Bipartite Matching

A bipartite graph in a graph whose vertices can be partitioned into two disjoint sets such that every edge has one endpoint in either set. A perfect matching is a matching which matches all vertices in the graph.

This implementation uses the alternating path algorithm. It is equivalent to reducing to the maximum flow problem and running the augmenting path algorithm on the resulting flow network. The worst case is O((E+V)V)

Network flow formulation of bipartite matching:

- Create s, t, one vertex for each student, and one vertex for each job.

- Add edge from s to each student (capacity 1).

- Add edge from each job to t (capacity 1).

- Add edge from student to each job offered (infinity capacity).

public class BipartiteMatching {

private static final int UNMATCHED = -1;

private final int V; // number of vertices in the graph

private BipartiteX bipartition; // the bipartition

private int cardinality; // cardinality of current matching

private int[] mate; // mate[v] = w if v-w is an edge in current matching, = -1 if v is not in current matching

private boolean[] inMinVertexCover; // inMinVertexCover[v] = true if v is in min vertex cover

private boolean[] marked; // marked[v] = true if v is reachable via alternating path

private int[] edgeTo; // edgeTo[v] = w if v-w is last edge on path to w

/**

* Determines a maximum matching (and a minimum vertex cover) in a bipartite graph.

*/

public BipartiteMatching(Graph G) {

bipartition = new BipartiteX(G);

if (!bipartition.isBipartite()) {

throw new IllegalArgumentException("graph is not bipartite");

}

this.V = G.V();

// initialize empty matching

mate = new int[V];

for (int v = 0; v < V; v++)

mate[v] = UNMATCHED;

// alternating path algorithm

while (hasAugmentingPath(G)) {

// find one endpoint t in alternating path

int t = -1;

for (int v = 0; v < G.V(); v++) {

if (!isMatched(v) && edgeTo[v] != -1) {

t = v;

break;

}

}

// update the matching according to alternating path in edgeTo[] array

for (int v = t; v != -1; v = edgeTo[edgeTo[v]]) {

int w = edgeTo[v];

mate[v] = w;

mate[w] = v;

}

cardinality++;

}

// find min vertex cover from marked[] array

inMinVertexCover = new boolean[V];

for (int v = 0; v < V; v++) {

if (bipartition.color(v) && !marked[v])

inMinVertexCover[v] = true;

if (!bipartition.color(v) && marked[v])

inMinVertexCover[v] = true;

}

}

/*

* is there an augmenting path?

* - if so, upon termination adj[] contains the level graph;

* - if not, upon termination marked[] specifies those vertices reachable via an alternating

* path from one side of the bipartition

*

* an alternating path is a path whose edges belong alternately to the matching and not

* to the matching

*

* an augmenting path is an alternating path that starts and ends at unmatched vertices

*

* this implementation finds a shortest augmenting path (fewest number of edges), though there

* is no particular advantage to do so here

*/

private boolean hasAugmentingPath(Graph G) {

marked = new boolean[V];

edgeTo = new int[V];

for (int v = 0; v < V; v++)

edgeTo[v] = -1;

// breadth-first search (starting from all unmatched vertices on one side of bipartition)

Queue<Integer> queue = new LinkedList<Integer>();

for (int v = 0; v < V; v++) {

if (bipartition.color(v) && !isMatched(v)) {

queue.offer(v);

marked[v] = true;

}

}

// run BFS, stopping as soon as an alternating path is found

while (!queue.isEmpty()) {

int v = queue.poll();

for (int w : G.adj(v)) {

// either (1) forward edge not in matching or (2) backward edge in matching

if (isResidualGraphEdge(v, w) && !marked[w]) {

edgeTo[w] = v;

marked[w] = true;

if (!isMatched(w))

return true;

queue.offer(w);

}

}

}

return false;

}

// is the edge v-w a forward edge not in the matching or a reverse edge in the matching?

private boolean isResidualGraphEdge(int v, int w) {

if ((mate[v] != w) && bipartition.color(v))

return true;

if ((mate[v] == w) && !bipartition.color(v))

return true;

return false;

}

/**

* Returns the vertex to which the specified vertex is matched in the maximum matching computed

* by the algorithm.

*/

public int mate(int v) {

validate(v);

return mate[v];

}

/**

* Returns true if the specified vertex is matched in the maximum matching computed by the

* algorithm.

*/

public boolean isMatched(int v) {

validate(v);

return mate[v] != UNMATCHED;

}

/**

* Returns the number of edges in a maximum matching.

*/

public int size() {

return cardinality;

}

/**

* Returns true if the graph contains a perfect matching. That is, the number of edges in a

* maximum matching is equal to one half of the number of vertices in the graph (so that every

* vertex is matched).

*/

public boolean isPerfect() {

return cardinality * 2 == V;

}

/**

* Returns true if the specified vertex is in the minimum vertex cover computed by the

* algorithm.

*/

public boolean inMinVertexCover(int v) {

validate(v);

return inMinVertexCover[v];

}

private void validate(int v) {

if (v < 0 || v >= V)

throw new IllegalArgumentException("vertex " + v + " is not between 0 and " + (V - 1));

}

class BipartiteX {

private static final boolean WHITE = false;

private boolean isBipartite; // is the graph bipartite?

private boolean[] color; // color[v] gives vertices on one side of bipartition

private boolean[] marked; // marked[v] = true if v has been visited in DFS

private int[] edgeTo; // edgeTo[v] = last edge on path to v

private Queue<Integer> cycle; // odd-length cycle

/**

* Determines whether an undirected graph is bipartite and finds either a bipartition or an

* odd-length cycle.

*/

public BipartiteX(Graph G) {

isBipartite = true;

color = new boolean[G.V()];

marked = new boolean[G.V()];

edgeTo = new int[G.V()];

for (int v = 0; v < G.V() && isBipartite; v++) {

if (!marked[v]) {

bfs(G, v);

}

}

assert check(G);

}

private void bfs(Graph G, int s) {

Queue<Integer> q = new LinkedList<>();

color[s] = WHITE;

marked[s] = true;

q.offer(s);

while (!q.isEmpty()) {

int v = q.poll();

for (int w : G.adj(v)) {

if (!marked[w]) {

marked[w] = true;

edgeTo[w] = v;

color[w] = !color[v];

q.offer(w);

} else if (color[w] == color[v]) {

isBipartite = false;

// to form odd cycle, consider s-v path and s-w path

// and let x be closest node to v and w common to two paths

// then (w-x path) + (x-v path) + (edge v-w) is an odd-length cycle

// Note: distTo[v] == distTo[w];

cycle = new LinkedList<Integer>();

Stack<Integer> stack = new Stack<Integer>();

int x = v, y = w;

while (x != y) {

stack.push(x);

cycle.offer(y);

x = edgeTo[x];

y = edgeTo[y];

}

stack.push(x);

while (!stack.isEmpty())

cycle.offer(stack.pop());

cycle.offer(w);

return;

}

}

}

}

/**

* Returns true if the graph is bipartite.

*/

public boolean isBipartite() {

return isBipartite;

}

/**

* Returns the side of the bipartite that vertex {@code v} is on.

*/

public boolean color(int v) {

validateVertex(v);

if (!isBipartite)

throw new UnsupportedOperationException("Graph is not bipartite");

return color[v];

}

/**

* Returns an odd-length cycle if the graph is not bipartite, and {@code null} otherwise.

*/

public Iterable<Integer> oddCycle() {

return cycle;

}

private boolean check(Graph G) {

// graph is bipartite

if (isBipartite) {

for (int v = 0; v < G.V(); v++) {

for (int w : G.adj(v)) {

if (color[v] == color[w]) {

System.err.printf("edge %d-%d with %d and %d in same side of bipartition\n", v, w, v, w);

return false;

}

}

}

}

// graph has an odd-length cycle

else {

// verify cycle

int first = -1, last = -1;

for (int v : oddCycle()) {

if (first == -1)

Val first = v;

last = v;

}

if (first != last) {

System.err.printf("cycle begins with %d and ends with %d\n", first, last);

return false;

}

}

return true;

}

// throw an IllegalArgumentException unless {@code 0 <= v < V}

private void validateVertex(int v) {

int V = marked.length;

if (v < 0 || v >= V)

throw new IllegalArgumentException("vertex " + v + " is not between 0 and " + (V - 1));

}

}

}

Graph Boot Camp

Flip A Boolean Matrix

Implement a routine that takes an n x m boolean array A together with an entry (x, y) and flips the color of the region associated with (x, y).

Let’s implement with DFS and BFS

// Deep-first Search

public static void flipColorDFS(List<List<Boolean>> A, int x, int y) {

final int[][] DIRS = new int[][] { { 0, 1 }, { 0, -1 }, { 1, 0 }, { -1, 0 } };

boolean color = A.get(x).get(y);

A.get(x).set(y, !color); // flip

for (int[] dir : DIRS) {

int nextX = x + dir[0], nextY = y + dir[1];

if (nextX >= 0 && nextX < A.size() && nextY >= 0 && nextY < A.get(nextX).size()

&& A.get(nextX).get(nextY) == color) {

flipColorDFS(A, nextX, nextY);

}

}

}

// Bread-first Search

public static void flipColorBFS(List<List<Boolean>> A, int x, int y) {

final int[][] DIRS = { { 0, 1 }, { 0, -1 }, { 1, 0 }, { -1, 0 } };

boolean color = A.get(x).get(y);

Queue<Point> queue = new LinkedList<>();

A.get(x).set(y, !A.get(x).get(y)); // flip

queue.add(new Point(x, y));

while (!queue.isEmpty()) {

Point curr = queue.element();

for (int[] dir : DIRS) {

Point next = new Point(curr.x + dir[0], curr.y + dir[1]);

if (next.x >= 0 && next.x < A.size() && next.y >= 0 && next.y < A.get(x).size()

&& A.get(next.x).get(next.y) == color) {

A.get(next.x).set(next.y, !color);

queue.add(next);

}

}

}

queue.remove();

}

Fill Enclosed Regions

Let A be a 2D array whose entries are either W or B, write a program that takes A, and replaces all Ws that can not reach the boundary with a B.

It is easier to focus on the inverse problem. Staring from outside and mark all the Ws (including neighbors) that can reach the boundary. Finally update other Ws.

public static void fillEnclosedRegions(List<List<Character>> board) {

// starting from first or last columns

for (int i = 0; i < board.size(); i++) {

if (board.get(i).get(0) == 'W')

markBoundaryRegion(i, 0, board);

if (board.get(i).get(board.get(i).size() - 1) == 'W')

markBoundaryRegion(i, board.get(i).size() - 1, board);

}

// starting from first or last rows

for (int j = 0; j < board.get(0).size(); j++) {

if (board.get(0).get(j) == 'W')

markBoundaryRegion(0, j, board);

if (board.get(board.size() - 1).get(j) == 'W')

markBoundaryRegion(board.size() - 1, j, board);

}

// marks the enclosed white regions as black

for (int i = 0; i < board.size(); i++) {

for (int j = 0; j < board.size(); j++) {

board.get(i).set(j, board.get(i).get(j) != 'T' ? 'B' : 'W');

}

}

}

private static void markBoundaryRegion(int i, int j, List<List<Character>> board) {

Queue<Point> queue = new LinkedList<>();

queue.add(new Point(i, j));

while (!queue.isEmpty()) {

Point curr = queue.poll();

if (curr.x >= 0 && curr.x < board.size() && curr.y >= 0 && curr.y < board.get(curr.x).size()

&& board.get(curr.x).get(curr.y) == 'W') {

board.get(curr.x).set(curr.y, 'T');

queue.add(new Point(curr.x - 1, curr.y));

queue.add(new Point(curr.x + 1, curr.y));

queue.add(new Point(curr.x, curr.y + 1));

queue.add(new Point(curr.x, curr.y - 1));

}

}

}

Kill Process

Given n processes, each process has a unique PID (process id) and its PPID (parent process id).

Each process only has one parent process, but may have one or more children processes. This is just like a tree structure. Only one process has PPID that is 0, which means this process has no parent process. All the PIDs will be distinct positive integers.

We use two list of integers to represent a list of processes, where the first list contains PID for each process and the second list contains the corresponding PPID.

Now given the two lists, and a PID representing a process you want to kill, return a list of PIDs of processes that will be killed in the end. You should assume that when a process is killed, all its children processes will be killed. No order is required for the final answer.

Example 1:

Input:

pid = [1, 3, 10, 5]

ppid = [3, 0, 5, 3]

kill = 5

Output: [5,10]

Explanation:

3

/ \

1 5

/

10

Kill 5 will also kill 10.

HashMap + Breadth First Search or Depth First Search, Both O(n) complexity.

public static List<Integer> killProcess(List<Integer> pid, List<Integer> ppid, int kill) {

List<Integer> list = new ArrayList<>();

Map<Integer, List<Integer>> graph = new HashMap<>();

for (int i = 0; i < ppid.size(); i++) {

if (ppid.get(i) > 0) {

graph.putIfAbsent(ppid.get(i), new ArrayList<>());

graph.get(ppid.get(i)).add(pid.get(i));

}

}

// killProcessDfs(graph, kill, list);

killProcessBfs(graph, kill, list);

return list;

}

private static void killProcessDfs(Map<Integer, List<Integer>> graph, int kill, List<Integer> list) {

list.add(kill);

if (graph.containsKey(kill)) {

for (int next : graph.get(kill)) {

killProcessDfs(graph, next, list);

}

}

}

private static void killProcessBfs(Map<Integer, List<Integer>> graph, int kill, List<Integer> list) {

Queue<Integer> queue = new ArrayDeque<>();

queue.offer(kill);

while (!queue.isEmpty()) {

int id = queue.poll();

list.add(id);

if (graph.containsKey(id)) {

for (int next : graph.get(kill)) {

queue.offer(next);

}

}

}

}

Deadlock Detection

One deadlock detection algorithm makes use of a “wait-for” graph: Processes are represented as nodes, and an edge from process P to Q implies P is waiting for Q to release its lock on the resource. A cycle in this graph implies the possibility of a deadlock.

Write a program that takes as input a directed graph and checks if the graph contains a cycle.

We can check for the existence of a cycle in graph by running DFS with maintaining a set of status. As soon as we discover an edge from a visiting vertex to a visiting vertex, a cycle exists in graph and we can stop.

The time complexity of DFS is O(V+E): we iterate over all vertices, and spend a constant amount of time per edge. The space complexity is O(V), which is the maximum stack depth.

public static boolean isDeadlocked(List<Vertex> graph) {

for (Vertex vertex : graph) {

if (vertex.state == State.UNVISITED && hasCycle(vertex)) {

return true;

}

}

return false;

}

private static boolean hasCycle(Vertex current) {

if (current.state == State.VISITING) {

return true;

}

current.state = State.VISITING;

for (Vertex next : current.edges) {

if (next.state != State.VISITED && hasCycle(next)) {

// edgeTo[next] = current; // if we need to track path

return true;

}

}

current.state = State.VISITED;

return false;

}

In a directed graph, we start at some node and every turn, walk along a directed edge of the graph. If we reach a node that is terminal, We say our starting node is eventually safe.

// This is a classic "white-gray-black" DFS algorithm

public List<Integer> eventualSafeNodes(int[][] graph) {

int N = graph.length;

int[] color = new int[N];

List<Integer> ans = new ArrayList<>();

for (int i = 0; i < N; i++) {

if (hasNoCycle(i, color, graph))

ans.add(i);

}

return ans;

}

// colors: WHITE 0, GRAY 1, BLACK 2;

private boolean hasNoCycle(int node, int[] color, int[][] graph) {

if (color[node] > 0)

return color[node] == 2;

color[node] = 1;

for (int nei : graph[node]) {

if (color[nei] == 2)

continue;

if (color[nei] == 1 || !hasNoCycle(nei, color, graph))

return false;

}

color[node] = 2;

return true;

}

Clone A Graph

Design an algorithm that takes a reference to a vertex u, and creates a copy of the graph on the vertices reachable from u. Return the copy of u.

We recognize new vertices by maintaining a hash table mapping vertices in the original graph to their counterparts in the new graph.

public static Vertex cloneGraph(Vertex graph) {

if (graph == null)

return null;

Map<Vertex, Vertex> map = new HashMap<>();

Queue<Vertex> queue = new LinkedList<>();

queue.add(graph);

map.put(graph, new Vertex(graph.id));

while (!queue.isEmpty()) {

Vertex v = queue.remove();

for (Vertex e : v.edges) {

if (!map.containsKey(e)) {

map.put(e, new Vertex(e.id));

queue.add(e);

}

map.get(v).edges.add(map.get(e));

}

}

return map.get(graph);

}

Word Ladder

Given two words (beginWord and endWord), and a dictionary’s word list, find the length of shortest transformation sequence from beginWord to endWord, such that:

Only one letter can be changed at a time. Each transformed word must exist in the word list. Note that beginWord is not a transformed word.

For example, Given:

beginWord = "hit"

endWord = "cog"

wordList = ["hot","dot","dog","lot","log","cog"]

As one shortest transformation is "hit" -> "hot" -> "dot" -> "dog" -> "cog",

return its length 5.

A transformation sequence is simply a path in Graph, so what we need is a shortest path from beginWord to endWord, by using two-end BFS, we can reduce the time complexity from O(n^k) -> O(2n^(k/2)), also use Trie in place of Set to break the loop as earlier as possible.

public class WordLadder {

// Two-end BFS, O(n^k) -> O(2n^(k/2))

public int ladderLength(String beginWord, String endWord, List<String> wordList) {

Set<String> wordSet = new HashSet<>(wordList);

// confirm if word list must contain end word!

if (!wordSet.contains(endWord))

return 0;

int length = 1;

Set<String> beginSet = new HashSet<>();

Set<String> endSet = new HashSet<>();

beginSet.add(beginWord);

endSet.add(endWord);

while (!beginSet.isEmpty() && !endSet.isEmpty()) {

// always choose the smaller end

if (beginSet.size() > endSet.size()) {

Set<String> temp = beginSet;

beginSet = endSet;

endSet = temp;

}

Set<String> newSet = new HashSet<String>();

for (String word : beginSet) {

char[] chrs = word.toCharArray();

for (int i = 0; i < chrs.length; i++) {

char temp = chrs[i];

for (char c = 'a'; c <= 'z'; c++) {

chrs[i] = c;

String target = String.valueOf(chrs);

if (endSet.contains(target))

return length + 1;

if (wordSet.contains(target)) {

newSet.add(target);

wordSet.remove(target);

}

}

chrs[i] = temp;

}

}

beginSet = newSet;

length++;

}

return 0;

}

// Use Set O(n^k)

public int ladderLength2(String beginWord, String endWord, List<String> wordList) {

Set<String> set = new HashSet<>(wordList);

Queue<Ladder> queue = new LinkedList<>();

queue.offer(new Ladder(beginWord, 1));

while (!queue.isEmpty()) {

Ladder ladder = queue.poll();

char[] chrs = ladder.word.toCharArray();

for (int i = 0; i < chrs.length; i++) {

char temp = chrs[i];

for (char j = 'a'; j <= 'z'; j++) {

chrs[i] = j;

String target = new String(chrs);

if (set.contains(target)) {

if (target.equals(endWord))

return ladder.depth + 1;

queue.offer(new Ladder(target, ladder.depth + 1));

set.remove(target); // only use it once!

}

}

chrs[i] = temp;

}

}

return 0;

}

// Use Trie O(n^k), break loop as earlier as possible!

public int ladderLength3(String beginWord, String endWord, List<String> wordList) {

TrieNode trie = buildTrieTree(wordList);

Queue<Ladder> queue = new LinkedList<>();

queue.offer(new Ladder(beginWord, 1));

while (!queue.isEmpty()) {

Ladder ladder = queue.poll();

char[] chrs = ladder.word.toCharArray();

TrieNode node = trie;

for (int i = 0; node != null && i < chrs.length; i++) {

char temp = chrs[i];

for (char j = 'a'; j <= 'z'; j++) {

chrs[i] = j;

if (searchAndMark(node, chrs, i)) {

String target = new String(chrs);

if (target.equals(endWord))

return ladder.depth + 1;

queue.offer(new Ladder(target, ladder.depth + 1));

}

}

chrs[i] = temp;

node = node.next[temp - 'a'];

}

}

return 0;

}

private TrieNode buildTrieTree(List<String> words) {

TrieNode root = new TrieNode();

for (String word : words) {

TrieNode node = root;

for (int i = 0; i < word.length(); i++) {

int j = word.charAt(i) - 'a';

if (node.next[j] == null)

node.next[j] = new TrieNode();

node = node.next[j];

}

node.isWord = true;

}

return root;

}

private boolean searchAndMark(TrieNode node, char[] word, int start) {

for (int i = start; i < word.length; i++) {

node = node.next[word[i] - 'a'];

if (node == null)

return false;

}

boolean isWord = node.isWord;

node.isWord = false;

return isWord;

}

private class Ladder {

String word;

int depth;

Ladder(String word, int deep) {

this.word = word;

this.depth = deep;

}

}

class TrieNode {

boolean isWord = false;

TrieNode[] next = new TrieNode[26];

}

public static void main(String[] args) {

WordLadder solution = new WordLadder();

List<String> wordList = new ArrayList<>();

wordList.addAll(Arrays.asList("hot", "dot", "dog", "lot", "log", "cog"));

int steps = solution.ladderLength("hit", "cog", wordList);

System.out.println(steps);

assert steps == 5;

}

}

Word Ladder II

The same as above, find all shortest transformation sequence(s) from beginWord to endWord

Use Two-end recursion or iteration BFS, achieve the complexity O(2n^(k/2)).

// BFS

public List<List<String>> findLadders(String beginWord, String endWord, List<String> wordList) {

List<List<String>> ladders = new ArrayList<>();

Set<String> wordSet = new HashSet<>(wordList);

if (!wordSet.contains(endWord))

return ladders;

// build the DAG graph using bidirectional BFS

Map<String, List<String>> graph = new HashMap<String, List<String>>();

if (!buildDAGbyBFS(beginWord, endWord, wordSet, graph)) {

return ladders;

}

// generate ladders by traversing the DAG graph

List<String> list = new ArrayList<>();

list.add(beginWord);

generateLadders(beginWord, endWord, graph, list, ladders);

return ladders;

}

private boolean buildDAGbyBFS(String beginWord, String endWord, Set<String> wordSet, Map<String, List<String>> graph) {

if (wordSet.contains(beginWord)) {

wordSet.remove(beginWord);

}

Set<String> beginSet = new HashSet<String>();

Set<String> endSet = new HashSet<String>();

beginSet.add(beginWord);

endSet.add(endWord);

boolean found = false;

boolean isForward = true;

while (!beginSet.isEmpty() && !endSet.isEmpty()) {

Set<String> newSet = new HashSet<String>();

// always choose the smaller set as begin set

if (beginSet.size() > endSet.size()) {

Set<String> temp = beginSet;

beginSet = endSet;

endSet = temp;

isForward = !isForward;

}

// clean up previous words

wordSet.removeAll(beginSet);

for (String word : beginSet) {

char[] chrs = word.toCharArray();

for (int i = 0; i < word.length(); i++) {

char old = chrs[i];

for (char c = 'a'; c <= 'z'; c++) {

chrs[i] = c;

if (old == c) {

continue;

}

String next = new String(chrs);

if (!wordSet.contains(next))

continue;

newSet.add(next);

String key = isForward ? word : next;

String value = isForward ? next : word;

graph.computeIfAbsent(key, k -> new ArrayList<>()).add(value);

if (endSet.contains(next))

found = true;

}

// backtrack

chrs[i] = old;

}

}

beginSet = newSet;

if (found) {

break;

}

}

return found;

}

// DFS

public List<List<String>> findLadders2(String beginWord, String endWord, List<String> wordList) {

List<List<String>> ladders = new ArrayList<>();

Set<String> wordSet = new HashSet<>(wordList);

if (!wordSet.contains(endWord))

return ladders;

Map<String, List<String>> graph = new HashMap<>(wordList.size());

Set<String> beginSet = new HashSet<>();

beginSet.add(beginWord);

Set<String> endSet = new HashSet<>();

endSet.add(endWord);

// build the DAG graph using bidirectional DFS

if (!buildDAGbyDFS(wordSet, beginSet, endSet, graph, true))

return ladders;

// generate ladders by traversing the DAG graph

List<String> list = new ArrayList<>();

list.add(beginWord);

generateLadders(beginWord, endWord, graph, list, ladders);

return ladders;

}

private boolean buildDAGbyDFS(Set<String> wordSet, Set<String> beginSet, Set<String> endSet, Map<String, List<String>> graph, boolean isForward) {

if (beginSet.isEmpty() || endSet.isEmpty())

return false;

wordSet.removeAll(beginSet);

boolean found = false;

Set<String> newSet = new HashSet<>();

for (String word : beginSet) {

char[] chrs = word.toCharArray();

for (int i = 0; i < chrs.length; i++) {

char old = chrs[i];

for (char c = 'a'; c <= 'z'; c++) {

if (old == c)

continue;

chrs[i] = c;

String next = new String(chrs);

if (!wordSet.contains(next))

continue;

newSet.add(next);

String key = isForward ? word : next;

String value = isForward ? next : word;

graph.computeIfAbsent(key, k -> new ArrayList<>()).add(value);

if (endSet.contains(next))

found = true;

}

// backtrack

chrs[i] = old;

}

}

if (found)

return true;

if (newSet.size() > endSet.size())

return buildDAGbyDFS(wordSet, endSet, newSet, graph, !isForward);

return buildDAGbyDFS(wordSet, newSet, endSet, graph, isForward);

}

private void generateLadders(String beginWord, String endWord, Map<String, List<String>> graph, List<String> path, List<List<String>> result) {

if (beginWord.equals(endWord)) {

result.add(new ArrayList<>(path));

} else if (graph.containsKey(beginWord)) {

for (String word : graph.get(beginWord)) {

path.add(word);

generateLadders(word, endWord, graph, path, result);

path.remove(path.size() - 1);

}

}

}

Can Tile

Small tile is 1 unit, Big tile is 5 units, given number of small tiles and big tiles, check whether we can tile the target.

public class Tile {

public static boolean canTile(int small, int big, int target) {

// return small >= (big * 5 > target ? target % 5 : target - big * 5);

return small >= (target / 5 > big ? target - big * 5 : target % 5);

}

public static boolean canTile2(int small, int big, int target) {

if (target == 0)

return true;

if (target < 0)

return false;

if (small == 0 && big == 0)

return false;

return (small > 0 && canTile2(small - 1, big, target - 1)) || (big > 0 && canTile2(small, big - 1, target - 5));

}

public static void main(String[] args) {

assert canTile(3, 4, 23) == canTile2(3, 4, 23);

assert canTile(3, 4, 24) == canTile2(3, 4, 24);

}

}

Sliding Puzzle

On a 2x3 board, there are 5 tiles represented by the integers 1 through 5, and an empty square represented by 0. A move consists of choosing 0 and a 4-directionally adjacent number and swapping it. The state of the board is solved if and only if the board is [[1,2,3],[4,5,0]].

Given a puzzle board, return the least number of moves required so that the state of the board is solved. If it is impossible for the state of the board to be solved, return -1.

Input: board = [[4,1,2],[5,0,3]]

Output: 5

Explanation: 5 is the smallest number of moves that solves the board.

An example path:

After move 0: [[4,1,2],[5,0,3]]

After move 1: [[4,1,2],[0,5,3]]

After move 2: [[0,1,2],[4,5,3]]

After move 3: [[1,0,2],[4,5,3]]

After move 4: [[1,2,0],[4,5,3]]

After move 5: [[1,2,3],[4,5,0]]

Think of this problem as a shortest path problem on a graph. Each node is a different board state, and we connect two boards by an edge if they can transformed into one another in one move. We can solve shortest path problems with breadth first search. There are (R*C)! possible board states, so the time and space complexity are both O(R*C*(R*C)!).

public int slidingPuzzle(int[][] board) {

int r = board.length, c = board[0].length;

int sr = 0, sc = 0;

search: for (sr = 0; sr < r; sr++)

for (sc = 0; sc < c; sc++)

if (board[sr][sc] == 0)

break search;

int[][] dirs = new int[][] { { 1, 0 }, { -1, 0 }, { 0, 1 }, { 0, -1 } };

Queue<Node> queue = new ArrayDeque<>();

Node start = new Node(board, sr, sc, 0);

queue.add(start);

Set<String> visited = new HashSet<>();

visited.add(start.boardHash);

String target = Arrays.deepToString(new int[][] { { 1, 2, 3 }, { 4, 5, 0 } });

while (!queue.isEmpty()) {

Node node = queue.remove();

if (node.boardHash.equals(target))

return node.depth;

for (int[] dir : dirs) {

int neiR = dir[0] + node.zeroR;

int neiC = dir[1] + node.zeroC;

if (neiR < 0 || neiR >= r || neiC < 0 || neiC >= c)

continue;

int[][] newBoard = new int[r][c];

int t = 0;

for (int[] row : node.curBoard)

newBoard[t++] = row.clone();

newBoard[node.zeroR][node.zeroC] = newBoard[neiR][neiC];

newBoard[neiR][neiC] = 0;

Node nei = new Node(newBoard, neiR, neiC, node.depth + 1);

if (visited.contains(nei.boardHash))

continue;

queue.add(nei);

visited.add(nei.boardHash);

}

}

return -1;

}

class Node {

int[][] curBoard;

String boardHash;

int zeroR;

int zeroC;

int depth;

Node(int[][] curBoard, int zeroR, int zeorC, int depth) {

this.curBoard = curBoard;

this.zeroR = zeroR;

this.zeroC = zeorC;

this.depth = depth;

this.boardHash = Arrays.deepToString(curBoard);

}

}

Bus Routes

We have a list of bus routes. Each routes[i] is a bus route that the i-th bus repeats forever. For example if routes[0] = [1, 5, 7], this means that the first bus (0-th indexed) travels in the sequence 1->5->7->1->5->7->1->… forever. We start at bus stop S (initially not on a bus), and we want to go to bus stop T. Travelling by buses only, what is the least number of buses we must take to reach our destination? Return -1 if it is not possible.

Solution: Instead of thinking of the stops as vertex (of a graph), think of the buses as nodes. We want to take the least number of buses, which is a shortest path problem, conducive to using a breadth-first search.

public int numBusesToDestination(int[][] routes, int S, int T) {

if (S == T)

return 0;

int N = routes.length;

List<List<Integer>> graph = new ArrayList<>(N);

for (int i = 0; i < N; i++) {

Arrays.sort(routes[i]);

graph.add(new ArrayList<>());

}

// two buses are connected if they share at least one bus stop.

for (int i = 0; i < N - 1; i++) {

for (int j = i + 1; j < N; j++) {

if (intersect(routes[i], routes[j])) {

graph.get(i).add(j);

graph.get(j).add(i);

}

}

}

// breadth first search with queue

Set<Integer> visited = new HashSet<>();

Set<Integer> targets = new HashSet<>();

Queue<Vertex> queue = new ArrayDeque<>();

for (int i = 0; i < N; i++) {

if (Arrays.binarySearch(routes[i], S) >= 0) {

queue.offer(new Vertex(i, 0));

visited.add(i);

}

if (Arrays.binarySearch(routes[i], T) >= 0)

targets.add(i);

}

while (!queue.isEmpty()) {

Vertex vertex = queue.poll();

if (targets.contains(vertex.id))

return vertex.depth + 1;

for (Integer neighbor : graph.get(vertex.id)) {

if (!visited.contains(neighbor)) {

queue.offer(new Vertex(neighbor, vertex.depth + 1));

visited.add(neighbor);

}

}

}

return -1;

}

private boolean intersect(int[] routeA, int[] routeB) {

int i = 0, j = 0;

while (i < routeA.length && j < routeB.length) {

if (routeA[i] == routeB[j])

return true;

else if (routeA[i] < routeB[j])

i++;

else

j++;

}

return false;

}

public static void main(String[] args) {

BusRoutes solution = new BusRoutes();

int[][] routes = { { 1, 2, 7 }, { 3, 6, 7 }, { 6, 4, 5 } };

assert solution.numBusesToDestination(routes, 1, 5) == 3;

}

Topological Ordering

A topological ordering of a directed acyclic graph (DAG): every edge goes from earlier in the ordering (upper left) to later in the ordering (lower right). A directed graph is acyclic if and only if it has a topological ordering.

DAGs can be used to represent compilation operations, dataflow programming, events and their influence, family tree, version control, compact sequence data, binary decision diagram etc.

Let us practice it with the Course Schedule problem:

There are a total of n courses you have to take, labeled from 0 to n - 1.

Some courses may have prerequisites, for example to take course 0 you have to first take course 1, which is expressed as a pair: [0,1]

Given the total number of courses and a list of prerequisite pairs, return the ordering of courses you should take to finish all courses.

There may be multiple correct orders, you just need to return one of them. If it is impossible to finish all courses, return an empty array.

For example:

2, [[1,0]]

There are a total of 2 courses to take. To take course 1 you should have finished course 0. So the correct course order is [0,1]

4, [[1,0],[2,0],[3,1],[3,2]]

There are a total of 4 courses to take. To take course 3 you should have finished both courses 1 and 2. Both courses 1 and 2 should be taken after you finished course 0. So one correct course order is [0,1,2,3]. Another correct ordering is[0,2,1,3].

The problem turns out to find a topological sort order of the courses, which would be a DAG if it has a valid order. try BFS and DFS to resolve it! O(E+V)

public int[] findOrderInBFS(int numCourses, int[][] prerequisites) {

// initialize directed graph

int[] indegrees = new int[numCourses];

List<List<Integer>> adjacents = new ArrayList<>(numCourses);

for (int i = 0; i < numCourses; i++) {

adjacents.add(new ArrayList<>());

}

for (int[] edge : prerequisites) {

indegrees[edge[0]]++;

adjacents.get(edge[1]).add(edge[0]);

}

// breadth first search

int[] order = new int[numCourses];

Queue<Integer> toVisit = new ArrayDeque<>();

for (int i = 0; i < indegrees.length; i++) {

if (indegrees[i] == 0)

toVisit.offer(i);

}

int visited = 0;

while (!toVisit.isEmpty()) {

int from = toVisit.poll();

order[visited++] = from;

for (int to : adjacents.get(from)) {

indegrees[to]--;

if (indegrees[to] == 0)

toVisit.offer(to);

}

}

// should visited all courses

return visited == indegrees.length ? order : new int[0];

}

// track cycle with three states

public int[] findOrderInDFS(int numCourses, int[][] prerequisites) {

// initialize directed graph

List<List<Integer>> adjacents = new ArrayList<>(numCourses);

for (int i = 0; i < numCourses; i++) {

adjacents.add(new ArrayList<>());

}

for (int[] edge : prerequisites) {

adjacents.get(edge[1]).add(edge[0]);

}

int[] states = new int[numCourses]; // 0=unvisited, 1=visiting, 2=visited

Stack<Integer> stack = new Stack<>();

for (int from = 0; from < numCourses; from++) {

if (!topologicalSort(adjacents, from, stack, states))

return new int[0];

}

int i = 0;

int[] order = new int[numCourses];

while (!stack.isEmpty()) {

order[i++] = stack.pop();

}

return order;

}

private boolean topologicalSort(List<List<Integer>> adjacents, int from, Stack<Integer> stack, int[] states) {

if (states[from] == 1)

return false;

if (states[from] == 2)

return true;

states[from] = 1; // visiting

for (Integer to : adjacents.get(from)) {

if (!topologicalSort(adjacents, to, stack, states))

return false;

}

states[from] = 2; // visited

stack.push(from);

return true;

}

/**

* There are n different online courses numbered from 1 to n. You are given an array courses where

* courses[i] = [durationi, lastDayi] indicate that the ith course should be taken continuously for

* durationi days and must be finished before or on lastDayi.

*

* You will start on the 1st day and you cannot take two or more courses simultaneously.

*

* Return the maximum number of courses that you can take.

*

* Use priority queue can achieve time complexity: O(nlog(n)).

*/

public int scheduleCourseIII(int[][] courses) {

// It's always profitable to take the course with a smaller lastDay

Arrays.sort(courses, (a, b) -> a[1] - b[1]);

// It's also profitable to take the course with a smaller duration and replace the larger one.

Queue<int[]> queue = new PriorityQueue<>((a, b) -> b[0] - a[0]);

int daysUsed = 0;

for (int[] course : courses) {

if (daysUsed + course[0] <= course[1]) {

queue.offer(course);

daysUsed += course[0];

} else if (!queue.isEmpty() && queue.peek()[0] > course[0]) {

daysUsed += course[0] - queue.poll()[0];

queue.offer(course);

}

}

return queue.size();

}

/**

* There are a total of numCourses courses you have to take, labeled from 0 to numCourses - 1. You

* are given an array prerequisites where prerequisites[i] = [ai, bi] indicates that you must take

* course ai first if you want to take course bi.

*

* For example, the pair [0, 1] indicates that you have to take course 0 before you can take course

* 1. Prerequisites can also be indirect. If course a is a prerequisite of course b, and course b is

* a prerequisite of course c, then course a is a prerequisite of course c.

*

* You are also given an array queries where queries[j] = [uj, vj]. For the jth query, you should

* answer whether course uj is a prerequisite of course vj or not.

*

* Return a boolean array answer, where answer[j] is the answer to the jth query.

*/

public List<Boolean> scheduleCourseIV(int numCourses, int[][] prerequisites, int[][] queries) {

int[] indegrees = new int[numCourses];

List<List<Integer>> adjacents = new ArrayList<>(numCourses);

// Use BitSet to track the previous courses been taken

List<BitSet> previousCourses = new ArrayList<>(numCourses);

for (int i = 0; i < numCourses; i++) {

adjacents.add(new ArrayList<>());

previousCourses.add(new BitSet(numCourses));

}

for (int[] edge : prerequisites) {

indegrees[edge[1]]++;

adjacents.get(edge[0]).add(edge[1]);

}

Deque<Integer> toVisit = new LinkedList<>();

for (int i = 0; i < numCourses; i++) {

if (indegrees[i] == 0) {

toVisit.push(i);

}

}

while (!toVisit.isEmpty()) {

int index = toVisit.pop();

for (int v : adjacents.get(index)) {

previousCourses.get(v).set(index);

previousCourses.get(v).or(previousCourses.get(index));

indegrees[v]--;

if (indegrees[v] == 0) {

toVisit.push(v);

}

}

}

List<Boolean> result = new ArrayList<>(queries.length);

for (int[] query : queries) {

if (previousCourses.get(query[1]).get(query[0])) {

result.add(true);

} else {

result.add(false);

}

}

return result;

}

Alien Dictionary

There is a new alien language which uses the latin alphabet. However, the order among letters are unknown to you. You receive a list of non-empty words from the dictionary, where words are sorted lexicographically by the rules of this new language. Derive the order of letters in this language.

Example: Input: [ “wrt”, “wrf”, “er”, “ett”, “rftt”]; Output: “wertf”

This is another typical topological sorting problem.

public String alienOrder(String[] words) {

Map<Character, Set<Character>> map = new HashMap<>();

Map<Character, Integer> degree = new HashMap<>();

StringBuilder result = new StringBuilder();

for (String word : words) {

for (char c : word.toCharArray()) {

degree.put(c, 0);

}

}

for (int i = 0; i < words.length - 1; i++) {

String curr = words[i];

String next = words[i + 1];

for (int j = 0; j < Math.min(curr.length(), next.length()); j++) {

char c1 = curr.charAt(j);

char c2 = next.charAt(j);

if (c1 != c2) {

if (!map.containsKey(c1))

map.put(c1, new HashSet<>());

Set<Character> set = map.get(c1);

if (!set.contains(c2)) {

set.add(c2);

degree.put(c2, degree.getOrDefault(c2, 0) + 1);

}

break;

}

}

}

Queue<Character> queue = new LinkedList<>();

for (Map.Entry<Character, Integer> entry : degree.entrySet()) {

if (entry.getValue() == 0)

queue.add(entry.getKey());

}

while (!queue.isEmpty()) {

char c = queue.remove();

result.append(c);

if (map.containsKey(c)) {

for (char c2 : map.get(c)) {

degree.put(c2, degree.get(c2) - 1);

if (degree.get(c2) == 0)

queue.add(c2);

}

}

}

if (result.length() != degree.size())

result.setLength(0);

return result.toString();

}

Is Graph Bipartible?

Given an undirected graph, return true if and only if it is bipartite.

Recall that a graph is bipartite if we can split it’s set of nodes into two independent subsets A and B such that every edge in the graph has one node in A and another node in B.

The graph is given in the following form: graph[i] is a list of indexes j for which the edge between nodes i and j exists. Each node is an integer between 0 and graph.length - 1. There are no self edges or parallel edges: graph[i] does not contain i, and it doesn’t contain any element twice.

Example 1:

Input: [[1,3], [0,2], [1,3], [0,2]]

Output: true

Explanation:

The graph looks like this:

0----1

| |

| |

3----2

We can divide the vertices into two groups: {0, 2} and {1, 3}.

Solution: Coloring by Depth-First Search, Time Complexity O(N+E), We explore each node once when we transform it from uncolored to colored, traversing all its edges in the process.

public boolean isBipartite(int[][] graph) {

int n = graph.length;

int[] color = new int[n];

Arrays.fill(color, -1);

for (int start = 0; start < n; ++start) {

if (color[start] == -1) {

Queue<Integer> queue = new ArrayDeque<>();

queue.offer(start);

color[start] = 0;

while (!queue.isEmpty()) {

Integer node = queue.poll();

for (int nei : graph[node]) {

if (color[nei] == -1) {

queue.offer(nei);

color[nei] = color[node] ^ 1;

} else if (color[nei] == color[node]) {

return false;

}

}

}

}

}

return true;

}

public static boolean isBipartible(Map<String, Set<String>> graph) {

Map<String, Integer> color = new HashMap<>();

for (String node : graph.keySet()) {

color.put(node, -1);

}

for (String start : graph.keySet()) {

if (color.get(start) == -1) {

Stack<String> stack = new Stack<>();

stack.push(start);

// We can always set to 0 because it starts with a new tree, not depends on other trees!

color.put(start, 0);

while (!stack.empty()) {

String node = stack.pop();

for (String nei : graph.get(node)) {

if (color.get(nei) == -1) {

stack.push(nei);

color.put(nei, color.get(node) ^ 1);

} else if (color.get(nei) == color.get(node)) {

return false;

}

}

}

}

}

return true;

}

// Another similar question to bipartite dislikes persons/parties

public static boolean possibleBipartition(int N, int[][] dislikes) {

// construct graph

@SuppressWarnings("unchecked")

List<Integer>[] graph = new ArrayList[N + 1];

for (int i = 1; i <= N; ++i)

graph[i] = new ArrayList<>();

for (int[] edge : dislikes) {

graph[edge[0]].add(edge[1]);

graph[edge[1]].add(edge[0]);

}

// coloring bipartites

Integer[] coloring = new Integer[N + 1];

for (int start = 1; start <= N; start++) {

if (coloring[start] == null) {

// use BFS

/*

Queue<Integer> queue = new ArrayDeque<>();

queue.offer(start);

coloring[start] = 0;

while (!queue.isEmpty()) {

Integer node = queue.poll();

for (int nei : graph[node]) {

if (coloring[nei] == null) {

queue.offer(nei);

coloring[nei] = coloring[node] ^ 1;

} else if (coloring[nei].equals(coloring[node])) {

return false;

}

}

}

*/

// use DFS

if (!possibleBipartition(start, 0, graph, coloring))

return false;

}

}

return true;

}

public static boolean possibleBipartition(int node, int color, List<Integer>[] graph, Integer[] coloring) {

if (coloring[node] != null)

return coloring[node] == color;

coloring[node] = color;

for (int nei : graph[node])

if (!possibleBipartition(nei, color ^ 1, graph, coloring))

return false;

return true;

}

// The input can be Map<String, Collection<String>> dislikes

public boolean possibleBipartition(int N, int[][] dislikes) {

// construct graph

Map<Integer, Set<Integer>> graph = new HashMap<>();

for (int i = 1; i <= N; ++i)

graph.put(i, new HashSet<>());

for (int[] edge : dislikes) {

graph.get(edge[0]).add(edge[1]);

graph.get(edge[1]).add(edge[0]);

}

// coloring bipartites

Map<Integer, Integer> coloring = new HashMap<>();

for (int start : graph.keySet()) {

if (!coloring.containsKey(start)) {

// use BFS

Queue<Integer> queue = new ArrayDeque<>();

queue.offer(start);

coloring.put(start, 0);

while (!queue.isEmpty()) {

Integer node = queue.poll();

for (int nei : graph.get(node)) {

if (!coloring.containsKey(nei)) {

queue.offer(nei);

coloring.put(nei, coloring.get(node) ^ 1);

} else if (coloring.get(nei).equals(coloring.get(node))) {

return false;

}

}

}

// use DFS

/*

if (!possibleBipartitionDfs(start, 0, graph, coloring))

return false;

*/

}

}

return true;

}

public boolean possibleBipartitionDfs(Integer node, Integer color, Map<Integer, Set<Integer>> graph, Map<Integer, Integer> coloring) {

if (coloring.containsKey(node))

return coloring.get(node).equals(color);

coloring.put(node, color);

for (int nei : graph.get(node)) {

if (!possibleBipartitionDfs(nei, color ^ 1, graph, coloring))

return false;

}

return true;

}

Sentence Similarity II

Given two sentences words1, words2 (each represented as an array of strings), and a list of similar word pairs pairs, determine if two sentences are similar.

For example, words1 = [“great”, “acting”, “skills”] and words2 = [“fine”, “drama”, “talent”] are similar, if the similar word pairs are pairs = [[“great”, “good”], [“fine”, “good”], [“acting”,”drama”], [“skills”,”talent”]].

Solution: Two words are similar if they are the same, or there is a path connecting them from edges represented by pairs.

We can check whether this path exists by performing a depth-first search from a word and seeing if we reach the other word. The search is performed on the underlying graph specified by the edges in pairs.

Time Complexity: O(NP), where N is the maximum length of words1 and words2, and P is the length of pairs. Each of N searches could search the entire graph. You can also use Union-Find search to achieve Time Complexity: O(NlogP+P).

private Map<String, String> parents = new HashMap<>();

public boolean areSentencesSimilarTwo(String[] sentence1, String[] sentence2, List<List<String>> similarPairs) {

if (sentence1.length != sentence2.length) {

return false;

}

for (List<String> list : similarPairs) {

union(list.get(0), list.get(1), parents);

}

for (int i = 0; i < sentence1.length; i++) {

if (!find(sentence1[i]).equals(find(sentence2[i]))) {

return false;

}

}

return true;

}

private void union(String s1, String s2, Map<String, String> roots) {

String ps1 = find(s1);

String ps2 = find(s2);

if (!ps1.equals(ps2)) {

roots.put(s2, ps1); // halve path

roots.put(ps2, ps1);

}

}

private String find(String s) {

while (parents.containsKey(s)) {

s = parents.get(s);

}

return s;

}